Application Performance Chatbot

Domains Involved

- AI

- Application Monitoring

- Chatbots

- Conversational UI

Using AI as a new tool in our design arsenal, the team addressed some nagging IBM Cloud issues with a "concept car": a virtual agent for application management.

A Developer on the Inside

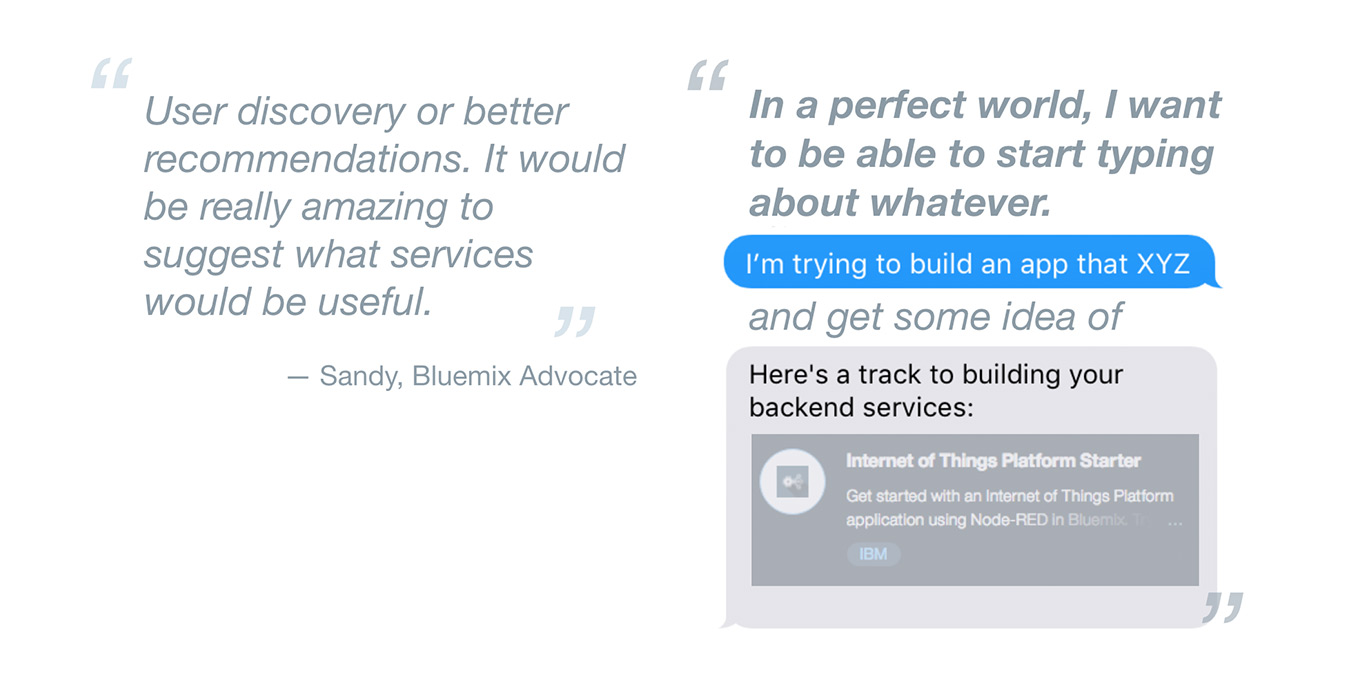

Researching user frustrations across IBM Cloud uncovered difficulty navigating its concepts, massive service catalog, and nomenclature, and often enough the cloud in general.

To address this, the team set out to use one of IBM's most prominent figures, Watson, to scale real-time user help with AI. We determined that, if done right, a virtual agent could be like the expert developer on the team: the one you could lean your chair back and ask, "Hey Watson, you've deployed a microservices pipeline using containers, right? How do I…"

While researching issues across IBM's cloud platform, we heard from users who didn't want to understand our conceptual models; they wanted IBM to tell them what they needed. This is where a virtual assistant could scale our support.

Conversational Best Practices

Having a firm understanding of both our users' needs and Watson technologies wouldn't be enough to build an elegant solution. Early ideation revealed how easy it would be to get conversation wrong. We could irritate users by constantly being present when we weren't wanted, effectively always being "underfoot." We could tell users too much at once, overwhelming them. We could fail to tell users what the bot could do, turning every interaction into a guessing game. We could fail to return a useful response when the bot didn't know the answer to a question, making it more of a burden than a help.

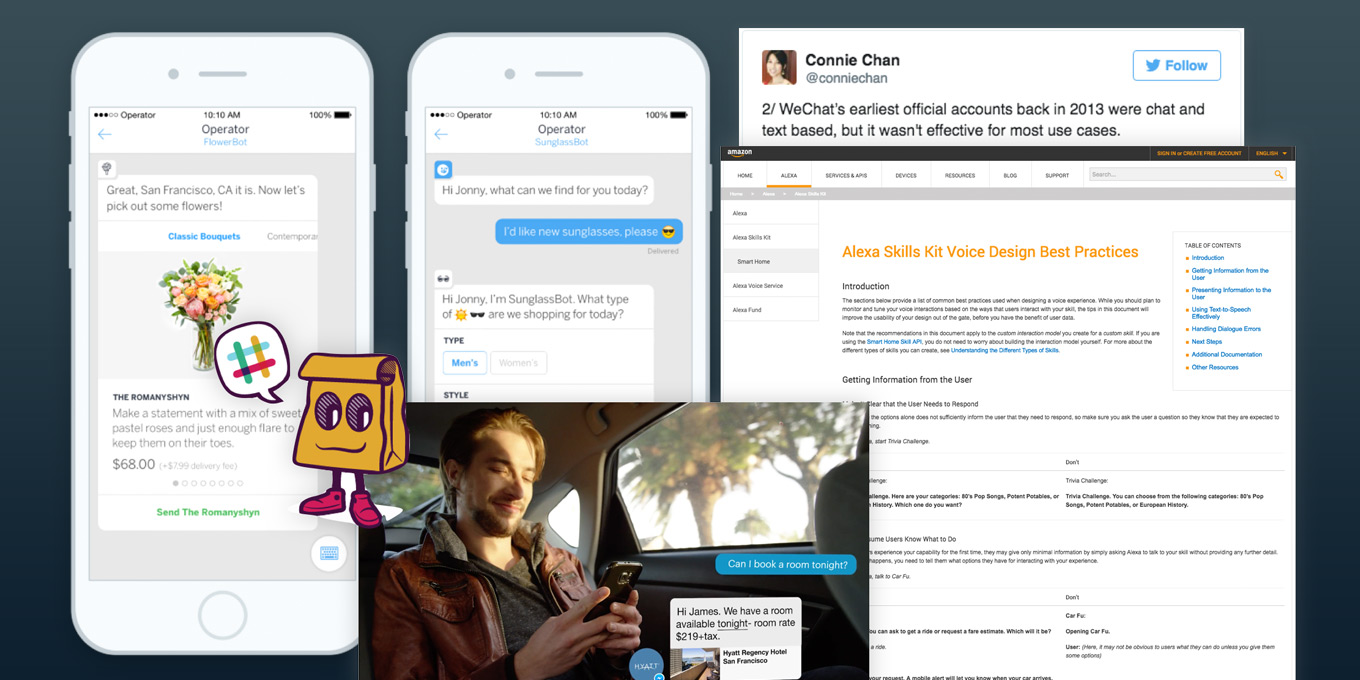

Fortunately, a lot of brilliant people have worked on chatbots. Before starting a single sketch or talking through our first interaction patterns, we set out to become domain experts in chatbots and conversational UI, learning from the mistakes and successes of those already in the field.

Focusing the Concept on a Problem

As I said, our research uncovered a large number of issues. In fact, our first ideation session for the virtual agent produced thirty-two use cases where it could improve IBM's cloud experience. To avoid choking on the solution's possibilities, we had to pick a single use case, start small, and learn from there.

We chose to build the chatbot around application management and AI-driven anomaly detection. We found that only our wealthiest clients could assess application health, and even then it took many administrators working together for weeks or months. Certainly not in real time.

“Evaporating” Versus “Persistent” Content

We found that there were two aspects to the user's conversation: the dialog and the content they were asking for. Users often referred back to this content as the dialog continued. To address this, the concept introduced a UI that holds important, "persistent" content on the right while the dialog evaporates harmlessly off to the left, so the conversation never pushes the necessary content away from the forefront of the UI.

Knowing When to Get Out of the Way

There's a tendency to get overexcited about a new tool in our interaction tool belt and forget why we're using it. We found from research and in our concepting sessions that we needed to practice restraint in using conversation as the way we expected users to take action. Conversation is best used to pose an abstract question against a sea of possibilities and have the system return an answer that would have taken the user hours or days, and screens' or sites' worth of reading and analysis, to uncover on their own. It's like asking for the needle in the haystack.

However, once the user had that needle, further conversation wasn't necessary. From there, the chat had to present everything the user needed to work with the "needle" and then get out of their way. The designs required "rich cards" complete with all of the information, routes to action, and feedback the user needed to do what they came for. Consider asking a coworker whether they know how to set up rules to filter company email. The coworker could share links to documentation or open the UI to show where and how it's done. What the coworker shouldn't do is take over the UI and start asking point-by-point questions about which rules need to be created, then set them up in the client. This is as awkward in conversational UI as it is in real life.

Developing a Lean First Delivery

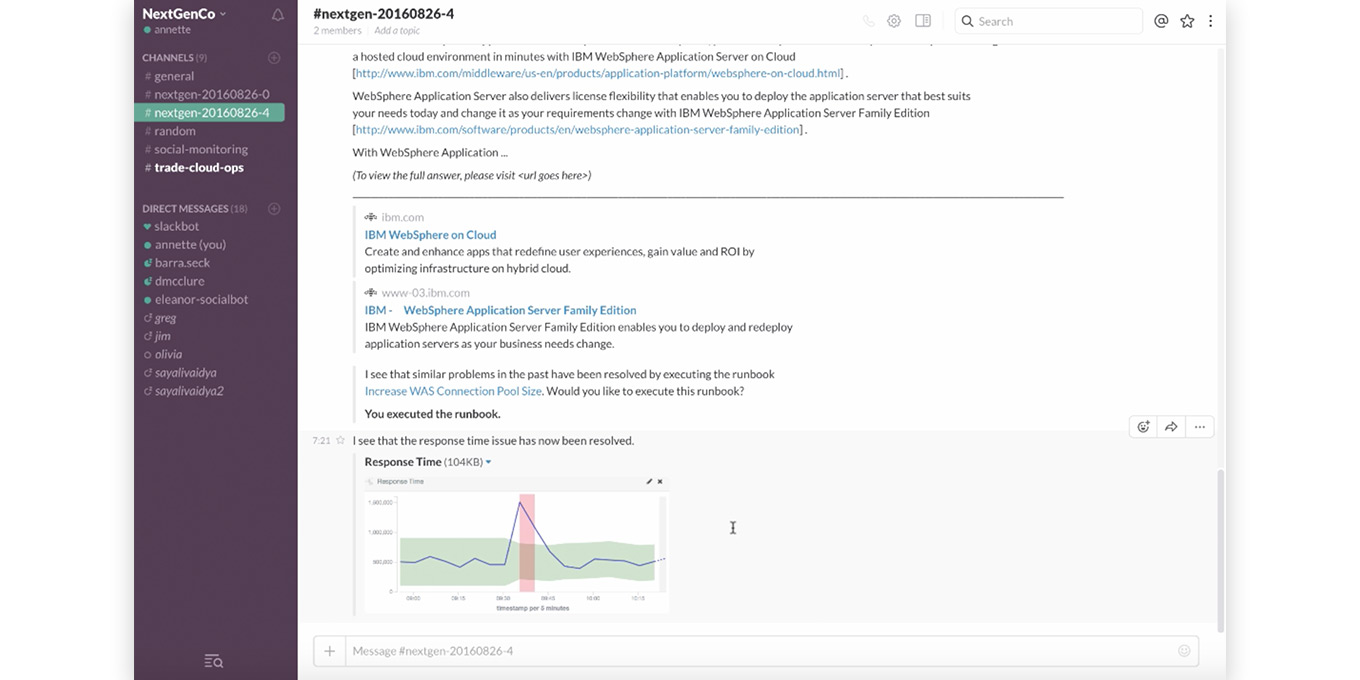

Building a service, maintaining it, and deploying it to users through IBM's Cloud channels was a significant commitment. To prove the technology with an MVP, we used Slack as the bot's delivery pipeline and UI, so the team didn't have to build out the entire experience. With the Slack deployment, clients could easily integrate the bot into their existing communication channels. While it didn't carry every feature our roadmap called for, it was enough to let users get the answers and take the actions they needed to prove the value to their DevOps organization.

Slack's integrations and bot support presented the perfect opportunity to prove the technology without building out a UI and a service delivery pipeline.

I can't go into further detail about this project publicly, but I'm happy to discuss it privately.